The Complete Hands-On Introduction To Airbyte

Published 3/2024

MP4 | Video: h264, 1920x1080 | Audio: AAC, 44.1 kHz

Language: English

Size: 1.79 GB

Duration: 3h 16m

Get started with Airbyte and learn how to use it with Apache Airflow, Snowflake, dbt and more

What you'll learn

Understand what Airbyte is, its architecture and core concepts, and its role in the modern data stack (MDS)

Install and set up Airbyte locally with Docker

Connect Airbyte to different data sources (databases, cloud storage, etc.)

Configure Airbyte to send data to various destinations (data warehouses, databases)

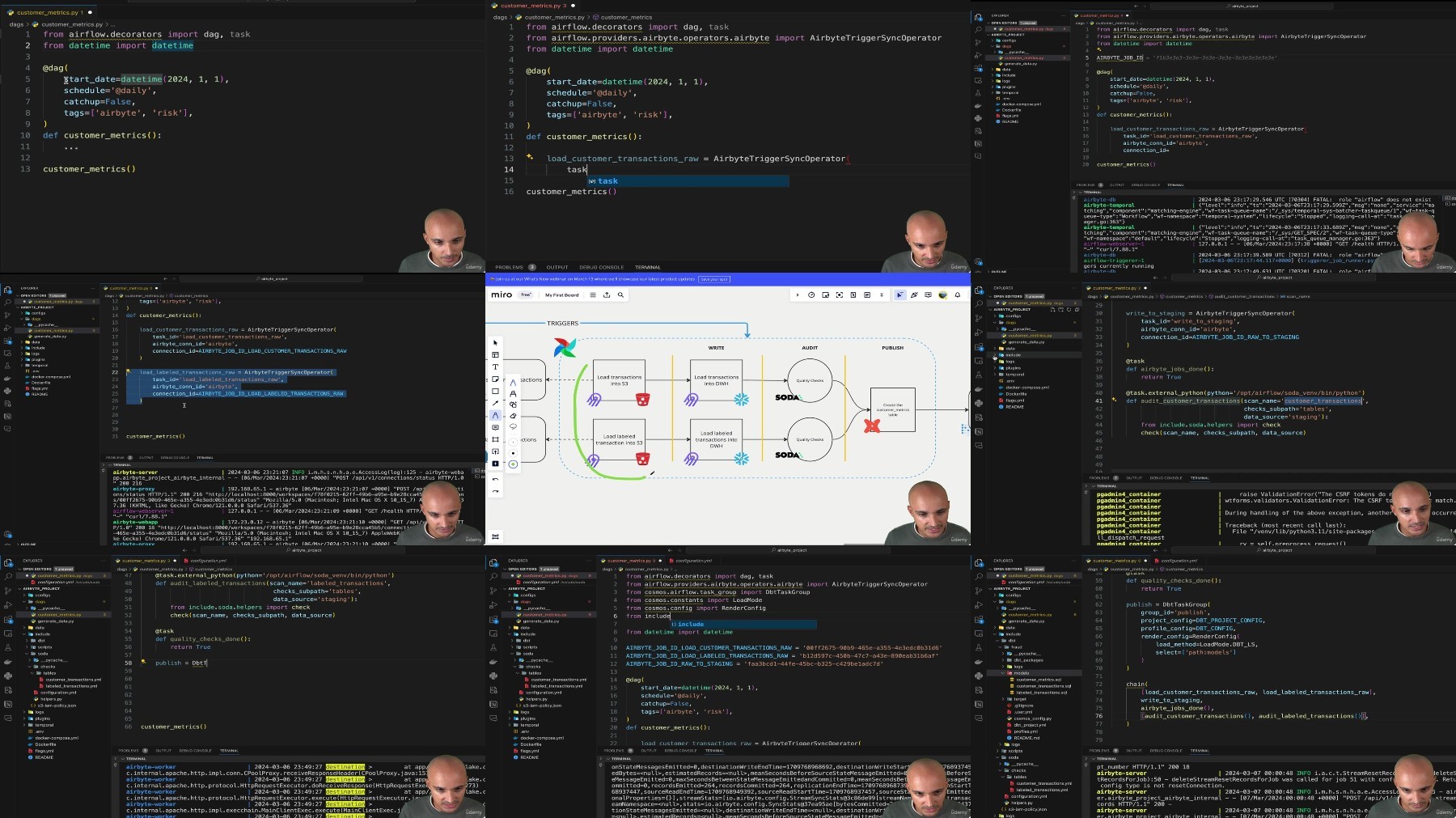

Develop a data pipeline from scratch with Airbyte, dbt, Soda, Airflow, Postgres, and Snowflake to run your first data syncs

Set up monitoring and notifications with Airbyte

Requirements

Prior experience with Python

Access to Docker on a local machine

A Google Cloud account with a billing account (for BigQuery)

Description

Welcome to the Complete Hands-On Introduction to Airbyte!

Airbyte is an open-source data integration engine that helps you consolidate data in your data warehouses, lakes, and databases. It is an alternative to Stitch and Fivetran and provides more than 300 connectors, most of them built by the community. Airbyte is also extensible: if the connector you need doesn't exist, you can create your own.

In this course, you will learn everything you need to get started with Airbyte:

What Airbyte is, where it fits in the data stack, and why it is helpful for you.

Essential concepts such as sources, destinations, connections, normalization, etc.

How to create a source and a destination to synchronize data with ease.

Airbyte best practices to efficiently move data between endpoints.

How to set up and run Airbyte locally with Docker and Kubernetes.

How to build a data pipeline from scratch using Airflow, dbt, Postgres, Snowflake, Airbyte, and Soda.

And more.

At the end of the course, you will fully understand Airbyte and be ready to use it with your data stack!

If you need any help, don't hesitate to ask in the Q&A section of Udemy. I will be more than happy to help!

See you in the course!

Overview

Section 1: Welcome!

Lecture 1 Welcome!

Lecture 2 Prerequisites

Lecture 3 Who am I?

Lecture 4 Learning Advice

Section 2: Airbyte Fundamentals

Lecture 5 Why Airbyte?

Lecture 6 What is Airbyte?

Lecture 7 The Core Concepts

Lecture 8 The Core Components

Lecture 9 Why not Airbyte?

Lecture 10 Airbyte Cloud or OSS?

Section 3: Getting started with Airbyte

Lecture 11 Introduction to Docker (Optional)

Lecture 12 Running Airbyte with Docker

Lecture 13 The Airbyte UI tour

Lecture 14 The Bank Pipeline

Lecture 15 Create your first source (Google Sheets)

Lecture 16 Create your first destination (BigQuery)

Lecture 17 Configure your first connection

Lecture 18 Make your first sync!

Lecture 19 Raw tables and additional columns?

Lecture 20 Connector classifications (Certified, Community, etc)

Section 4: Advanced Concepts

Lecture 21 How does a sync work?

Lecture 22 Side notes for Postgres

Lecture 23 Discover the sync modes

Lecture 24 Handling schema changes

Lecture 25 What is Change Data Capture (CDC)?

Lecture 26 Enable CDC with Postgres

Lecture 27 Syncing data between Postgres and BigQuery using CDC

Lecture 28 The Sync Modes cheat sheet

Lecture 29 CDC under the hood

Section 5: The Fraud Project

Lecture 30 Project overview

Lecture 31 Learning recommendations

Lecture 32 The Setup and requirements

Lecture 33 The Data Generators (Python Scripts)

Lecture 34 Quick introduction to Apache Airflow

Lecture 35 Let's generate some data!

Lecture 36 Set up the S3 bucket with the user

Lecture 37 Create the AWS S3 destination with Airbyte

Lecture 38 Create the Postgres to S3 connection with Airbyte

Lecture 39 Create the MySQL source with Airbyte

Lecture 40 Create the MySQL to S3 connection with Airbyte

Lecture 41 What's the Write-Audit-Publish pattern?

Lecture 42 Create the AWS S3 source with Airbyte

Lecture 43 Create the Snowflake destination with Airbyte

Lecture 44 Create the Raw to Staging connection with Airbyte

Lecture 45 Quick introduction to Soda

Lecture 46 Write data quality checks with Soda

Lecture 47 Create the customer_metrics table with dbt

Lecture 48 Create the Fraud Data Pipeline with Airflow

Lecture 49 The pipeline in action!

Data Engineers, Analytics Engineers, Data Architects
